This week I resolved to do more snacking. Not of the doughnut kind (tempting as that is) but a thing I have read about called exercise snacking. It’s rather fun. Instead of resolving that anything other than a full-scale workout is a waste of time, the philosophy of snacking advises working small bursts of activity into your daily routine, whatever that is. I decided to experiment with it. So far this week I have done some calf exercises on the bottom stair while my coffee was brewing, some balancing exercises in the kitchen while cooking (there are probably some health and safety issues with this but I’m a grown adult and doing it at my own risk), plus some squats while finishing a drama on Netflix (far less risky, although the cat was pretty weirded out). None of this snacking is replacing my twice-weekly visits to the gymnasium from hell, but they form a picnic hamper of exercise snacks that I can work into my day without making any effortful changes to my everyday lifestyle.

This got me thinking about how the principles of snacking can be applied to studying. As clients will know, I work in half-hour slots and spend a great deal of my time persuading students that short bursts of focused work are far superior to longer periods of dwindling focus. So many students remain convinced that they need huge swathes of time in order to be able to study effectively, when in fact the reverse is true. No matter how much we learn from cognitive science about the limited capacity of our working memory and the shortness of our attention span, most students (and often their parents) remain wedded to the idea that they need a lengthy stretch of time for studying to be worthwhile.

Much of this attitude, of course, stems from good old-fashioned work avoidance. We’ve all done it: pretended to ourselves that we simply don’t have time for something when in fact what we’re doing is manufacturing an excuse to put off whatever it is that we don’t want to do until the mythical day when we will have plenty of time to dedicate to it. You wouldn’t believe how much time I can convince myself is required to clean the bathroom. Part of overcoming this tendency is to call it out: point out to students when they are using a lack of available time simply as an excuse. But there is, I think, also a genuine anxiety amongst many students that they need long stretches of time in order to be able to achieve something. It often surprises them greatly when I inform them not only that much can be achieved in 10, 15 or 20 minutes but that in fact this kind of approach is optimal. It is not a necessary compromise in a busy lifestyle to fit your work into short, focused bursts: it is actually the ideal. The same is true for exercise snacks, for which there is a growing body of evidence that suggests the benefits of these short bursts of exercise can actually outweigh those of longer stretches.

One of the most counter-intuitive findings from cognitive science in recent years has been that regularly switching focus from one area of study to another is actually more effective for learning than spending extended periods of time on one thing. At first, I really struggled with this in the classroom, as all my training had taught me to pick one learning objective and hammer this home throughout the lesson. But up-to-date research-informed teaching advocates for mixing it up, especially in a setting like the school I used to work in where lessons were an hour long. A whole hour on one learning focus is not effective; far better to have one main learning focus plus another completely separate one to reinvigorate the students’ focus and challenge them to recall prior learning on a completely different topic. I do this whenever possible in my half-hour tutoring sessions, which may have one core learning purpose plus a secondary curve-ball that I throw in to challenge students to recall something we covered the previous week or even some time ago. This kind of switching keeps the mind alert and allows for regular retrieval and recall.

Retrieval snacking is also something that friends and family can help with and that students can and should be encouraged to do habitually. If you’re supporting your child with learning their noun endings, why not ask them randomly during the day to reel off the endings of the 1st declension? This kind of random questioning will pay dividends in the long run, as it forces a child’s brain to recall their learning on a regular basis and out of context. Nothing could be more effective at cementing something into their long-term memory, which is the greatest gift any student can give themselves in order to succeed. My grandfather (a trained teacher himself) used to do this with me when I was small and was struggling to learn my times tables. “What are nine sevens?” he would yell out at random points during the day and I had to answer. It worked.

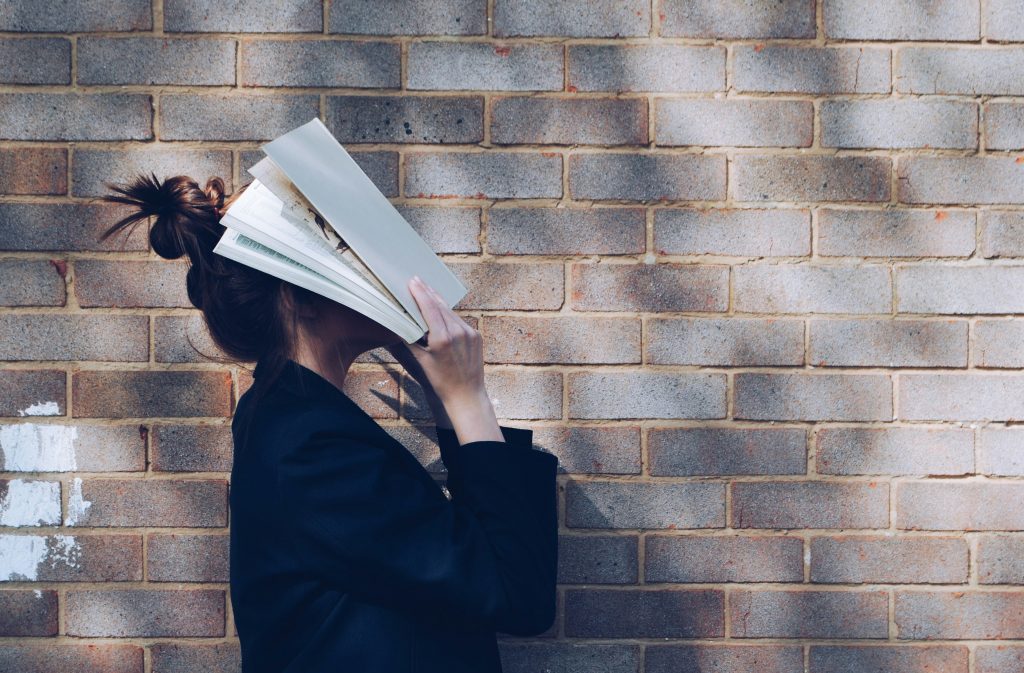

So, let’s hear it for study snacks: short, random moments when a student challenges themselves to remember something. Adults can help and support them in this process as well as encourage them to develop it as a habit for themselves. Share with them the fact that this works and will help them with long-term recall. Apart from anything else, it sends the message that study – like exercise – should be a part of daily life and woven into the fabric of your routine and habits. You don’t even need a desk to do it.