It is difficult for anyone outside the profession to comprehend the full potential gamut of horror that is the secondary-school cover lesson. Not only does it mean losing what is potentially your only free slot of time during that day, the reality of that cover lesson can be genuinely terrifying.

I recall opening up the cover folder and reading instructions such as the following:

“Explain to students the fundamentals of the carbon cycle”. Um. Okay.

“Invite students to share their views on …” (insert anything here, frankly, for horrors to commence).

“Go through the answers” – when this was Key Stage 4 maths, my blood truly ran cold with terror.

Yesterday, on the platform formerly known as Twitter, Andrew Old (a figure known to anyone who does EduTwitter) asked the following: what is the worst cover lesson you have ever had to do? He followed this up with his own entries for the competition, saying that he was torn between an MFL lesson where the work was a wordsearch that didn’t actually have any of the words in, a science lesson where the work was “write a rap about the rock cycle” and “any PE cover where they actually had to play a sport”. The last of these brought back a flash memory of one Year 9 tennis cover during my first year, during which I learnt a valuable lesson and a principle that I stuck resolutely to for the rest of my 21-year career: do not – repeat not – go into work with a hangover. You will be punished.

Others on the platform added their own entries to the competition and I share some of these experiences purely so that readers may appreciate just what it is that your average teacher may go through on a typical day. One reported a double-booked room and having to find another room with a class he did not know. One reported the radiator bursting during the session. Too many to count reported simply diabolical situations that would try the patience of anyone who values their sanity (most of them involving either PE or Music), but I think my personal favourite was the following: “I received a cover sheet. The first instruction was: collect inflatable sheep from sports hall. I replied and said that I would not be covering this lesson”. I think I laughed for five minutes about that one.

The only other response I found that involved someone simply refusing to go ahead with a cover lesson was this one: “during my PGCE (first day of my first placement no less) I had to perform CPR on my mentor teacher after he suffered a cardiac arrest. I was asked to cover his lessons for the day after he was taken to hospital. After a pregnant pause I simply said no. I wish this was made up.”

These days, I get to hear about cover lessons from the students’ point of view, and in many ways their accounts are no less gruelling. Students that I work with who attend school in the state sector report teacher absences at a record high and last year I worked with several Year 11 students who had no teacher at all for the majority of the school year; one student was affected in this way in multiple subjects. In the private sector, recruitment and retention seem to be marginally better, but the absence rate remains significant and the quality of cover work an issue. The problem is always particularly acute in minority subjects, where the absence of the subject expert can create an insurmountable vacuum that nobody has the expertise to fill. This was a pressure I felt acutely as the sole Latinist in the school I used to work in. The one and only time in my entire career when I was genuinely too sick to set work (indeed I could not get out of bed and considered the need for medical help), my HoD rang me up to ask me what he should do. I understand, I really do, and it certainly brought home the need for some kind of emergency provision.

One of the things that has struck me since leaving the profession is how little attention most schools give to the inescapable reality of cover and how damaging this is to the student body. I recall school leaders talking about this but in a manner that simply seemed to emphasise how important our presence was in the classroom, not in a manner that brought any practical solutions to the unavoidable fact that sometimes we will be absent. School leaders really do need to face up to the reality that every child in their school will face a significant number of cover lessons during every month – at times, during every week. Schools should have a clear and workable policy when it comes to the expectations for a cover lesson, and these expectations should also be shared and repeated as a mantra to the students. For example, one school I worked in had the rule that cover work must be something that students could complete independently and in silence; this was a great rule, but it would have been considerably more powerful if that rule had been shared as an expectation with the students!

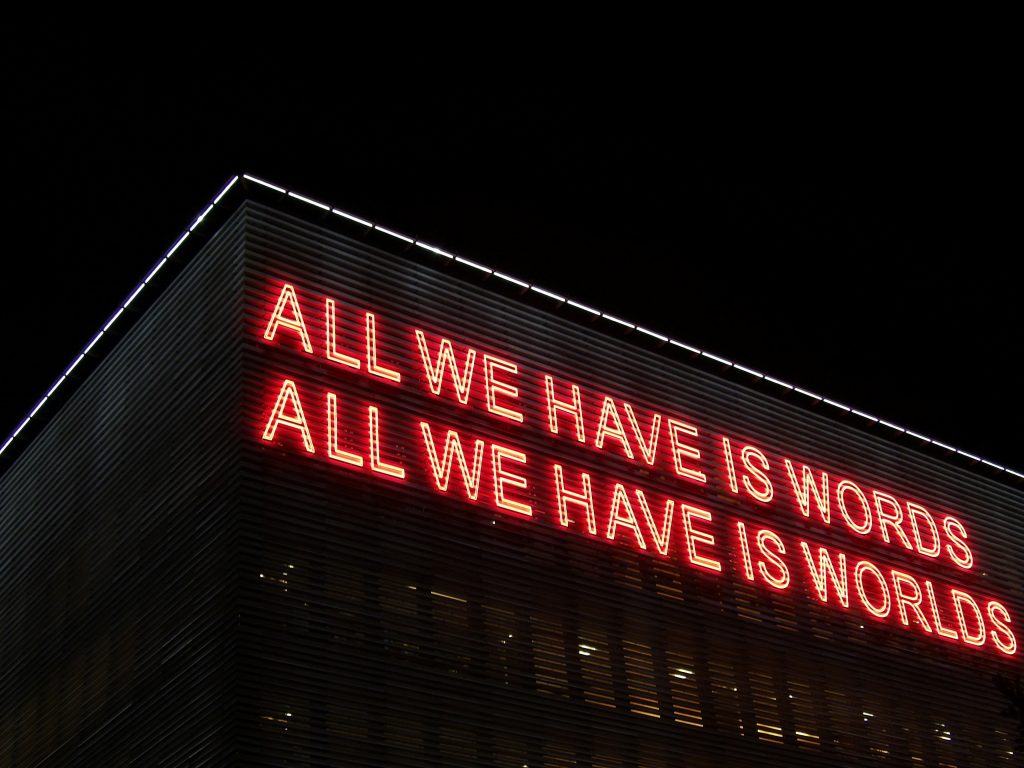

I realise – now that I am outside the white heat of the situation – how much more I could have done to prepare students for what to do in a cover lesson. I absolutely could have done this myself, although I maintain that it would have been much more powerful to make it a school-wide expectation and something that is displayed for all students to see. All learners could be instructed on what they should do in the absence of specific cover work: for example, learning material from their Knowledge Organiser. With a bit of effort to do the groundwork, this would make life so much easier both for classroom teachers when they end up sick and for those who are providing the cover.

As a professional tutor now, I cannot influence what happens in the classroom, but I can help to make that experience more profitable and worthwhile for the individual students that I work with. I discuss with them what they can and should do when their teacher is absent and many of them take these suggestions on board. There are so many things that a student can use spare time for, but most of them lack the initiative to make use of that time without explicit instructions and guidance. The students I work with always have something that they know we are rote-learning and I talk to them about making efficient use of any spare classroom time to test themselves on whatever it is we are working on. In languages, the list of what students need to commit to memory is pretty relentless, so no student should ever be left twiddling their thumbs, but they really do need it spelled out to them that this is what they should be doing with the time.